The Job Applicant That Didn’t Exist

Imagine this: A job candidate aces your coding test, completing it faster than any other applicant. Their resume is flawless, their credentials are top-tier, and their interview answers are polished.

But something feels… off.

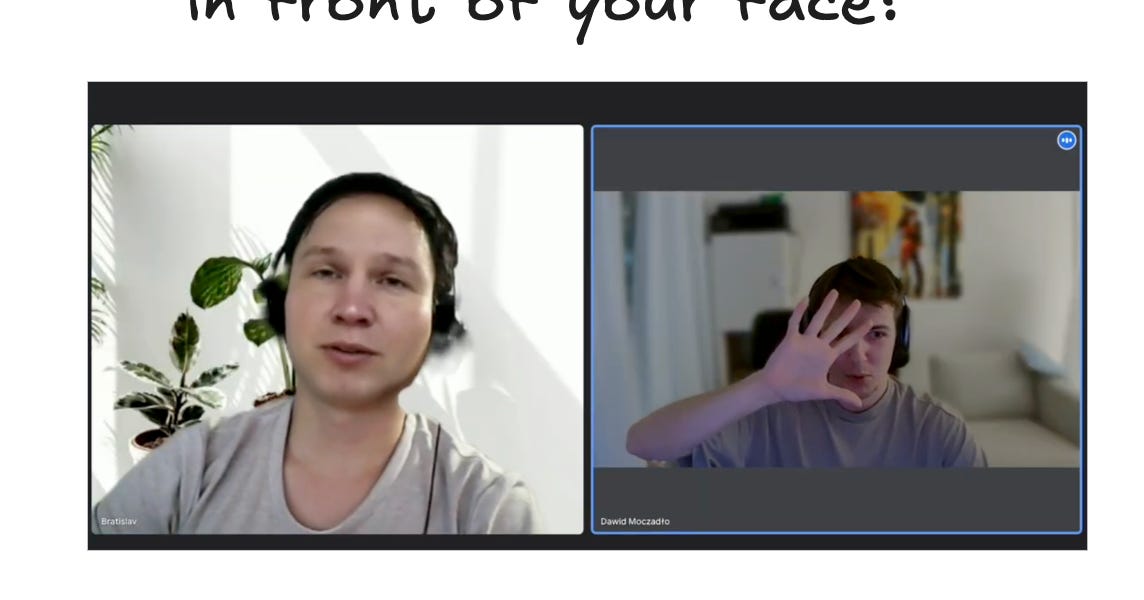

That’s exactly what happened to Vidoc Security, a cybersecurity startup, when they nearly hired a completely fake AI-generated applicant.

During the final video interview, the candidate refused to place their hand in front of their face, a simple request that would have broken their deepfake disguise.

Vidoc caught the fraud just in time. Two months later, it happened again.

They exposed a second deepfake candidate who had manipulated their video feed in real time and had already passed multiple rounds of screening.

Take a read of the full postmortem by Gergely Orosz...

And Vidoc isn’t alone.

Hiring fraud is exploding. AI tools allow anyone to fake expertise, while state-sponsored operations like North Korean IT worker scams infiltrate global companies under false identities.

This isn’t just a hiring scam. It’s an existential threat to recruitment.

AI Enters the Hiring Chat (Literally)

Hiring managers have long relied on AI-driven tools to streamline applications, automate screenings, and even analyze candidate speech patterns.

But job seekers have flipped the script.

🔹 Resumes crafted by ChatGPT

🔹 Portfolios designed by Midjourney

🔹 Real-time AI whispering interview answers through earpieces

The result? A credibility crisis.

Who’s truly qualified, and who’s just exceptionally good at using AI?

Even worse, some “candidates” aren’t candidates at all.

Hiring fraud is no longer just about bad actors gaming the system. It’s an international security threat.

Blame the Game, Not the Players

AI-generated candidates may be new, but gaming the hiring system is not.

People have been keyword-stuffing resumes, hiring ghostwriters for cover letters, and inflating job titles for years.

AI just makes cheating faster, easier, and nearly undetectable. So instead of panicking about AI fakers, employers should be asking:

Why does our hiring process reward fakery in the first place?

✅ A buzzword-heavy resume passes through ATS filters.

✅ A candidate who memorized “Top 10 Interview Answers” breezes through an interview.

✅ A technically weak but well-rehearsed applicant outshines a skilled but introverted one.

And now, AI is amplifying these hiring loopholes at scale.

If it’s easy for an AI-assisted job seeker to look like a perfect hire, what’s stopping cybercriminals from doing the same?

North Korean IT Workers: The Global Hiring Fraud You Should Be Worried About

For most companies, hiring fraud is an inconvenience. For some, it’s a national security risk.

While AI-generated resumes and deepfake candidates are concerning, there’s an even more sophisticated operation at play, one that goes far beyond individual job seekers looking to game the system. State-sponsored cybercriminals are using hiring fraud as a tool for financial and intelligence warfare.

According to Google Cloud’s Threat Intelligence report, North Korean IT workers have infiltrated companies worldwide, primarily in tech, finance, and cybersecurity roles. The goal? To funnel millions of dollars back to the DPRK regime while embedding themselves inside Western companies.

Why Is North Korea Targeting Remote Tech Jobs?

🔹 Generating Revenue for the DPRK Regime

- Fraudulent salaries fund weapons programs, cyberwarfare, and sanctions evasion.

🔹 Access to Critical Infrastructure

- DPRK operatives have used stolen credentials to introduce malware and exfiltrate sensitive data.

🔹 Exploiting the Remote Work Boom

- Remote hiring makes it easier to fake identities, manipulate resumes, and evade background checks.

The $6.8M Hiring Scam

A facilitator working with North Korean IT workers helped fake candidates secure over 300 jobs, using:

✅ Stolen Identities – Real names, fake workers

✅ Laptop Farms – U.S. company laptops rerouted to offshore locations

✅ Multiple Simultaneous Jobs – One person, 5-10 salaries

The total damage? $6.8 million in fraudulent salaries.

How to Spot AI Fakers & Hiring Fraud: Key Red Flags

🚩 1. AI-Optimized Resumes That Cheat the System

- AI-generated resumes mirror job descriptions perfectly.

- Solution: Use AI-detection tools like GPTZero or Originality.ai to flag suspicious applications.
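To make "mirrors the job description perfectly" concrete, here is a minimal sketch of a word-overlap check. It is not how GPTZero or Originality.ai work; it is a simple illustrative heuristic. The function names and the 0.9 threshold are assumptions chosen for this example, and a high score should only send the application to a human reviewer, not reject it.

```python
import re


def tokens(text: str) -> set[str]:
    """Return the lowercase words of three or more letters found in text."""
    return set(re.findall(r"[a-z]{3,}", text.lower()))


def mirror_score(job_description: str, resume: str) -> float:
    """Fraction of job-description words that also appear in the resume."""
    jd_words = tokens(job_description)
    if not jd_words:
        return 0.0
    return len(jd_words & tokens(resume)) / len(jd_words)


def needs_review(job_description: str, resume: str, threshold: float = 0.9) -> bool:
    """Flag a resume for human review when it echoes nearly every word
    of the job description. The threshold is an arbitrary assumption."""
    return mirror_score(job_description, resume) >= threshold
```

A genuine candidate can also score high on this check, which is why it works best as one signal alongside the footprint and interview checks below.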

🚩 2. Inconsistent Digital Footprints

- Fake candidates have limited online presence or questionable LinkedIn connections.

- Solution: Cross-check GitHub, LinkedIn, and industry contributions for real engagement.

🚩 3. Suspicious Video Interview Behavior

- AI fakers avoid direct eye contact, use filters, or refuse to perform basic actions (like turning their head).

- Solution: Record video interviews and require candidates to turn off all filters.

🚩 4. Work Experience That Doesn't Add Up

- Fraudulent candidates list non-existent companies or inconsistent job titles.

- Solution: Contact past employers directly instead of relying on LinkedIn.

How Employers Can Defend Against AI Hiring Fraud

Vidoc Security and Google’s cybersecurity team recommend the following strategies to detect and prevent AI-generated candidates:

Implement AI Detection for Resumes

- Use GPTZero, Copyleaks, or Originality.ai to scan for AI-written cover letters and CVs.

Require Live Video Verification

- Conduct real-time video calls, and ask candidates to perform physical actions (like moving their head or touching their face).

Cross-Check Digital Footprints

- Verify social media engagement, GitHub repositories, and past employer references.

Use Identity Verification Tools

- Require notarized identity proof and multi-step background checks.

Monitor Remote Work Access

- Block suspicious VPNs, remote desktop tools, and unusual login patterns.
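As a rough illustration of what monitoring remote access could look like, the sketch below tags a login with the three signals named above. The `LoginEvent` fields and the `login_flags` function are hypothetical; in practice these values would come from your identity provider or endpoint tooling, and the rules would need tuning to your own workforce.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginEvent:
    user: str
    country: str          # country code resolved from the source IP
    remote_desktop: bool  # session arrived through a remote desktop tool
    known_vpn: bool       # source IP appears on a VPN or proxy list


def login_flags(event: LoginEvent, expected_country: str) -> list[str]:
    """Return the reasons, if any, that a login deserves a closer look."""
    flags = []
    if event.country != expected_country:
        flags.append("unexpected_country")
    if event.remote_desktop:
        flags.append("remote_desktop")
    if event.known_vpn:
        flags.append("vpn_or_proxy")
    return flags
```

An empty list means nothing stood out. Any flag is a prompt for follow-up, not proof of fraud, since legitimate employees travel and use VPNs too.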

Educate HR and Hiring Teams

- Train recruiters to spot AI fakers, deepfake interviews, and stolen identities.